Difference between type 1 and type 2 errors

In statistical test theory, the concept of a statistical error is an integral part of hypothesis testing. A hypothesis test chooses between two competing hypotheses: the null hypothesis and the alternative hypothesis. The null hypothesis is presumed to be true until the data provide convincing evidence against it.


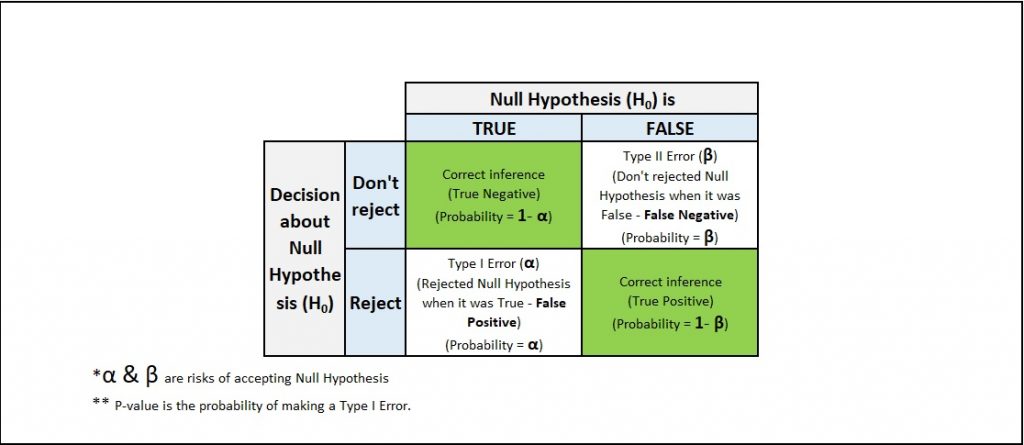

No hypothesis test is 100% accurate; there is always some chance of error. Two types of error can occur:

- Type I error

- Type II error

The image above shows the four possible outcomes of a test: two are correct decisions and two are errors.

A correct inference means that we either failed to reject the null hypothesis when it was true, or rejected the null hypothesis when it was false.
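The four boxes of the decision table can be expressed as a small lookup from (reality, decision) to outcome. This is a minimal illustrative Python sketch; the function name `classify_decision` is hypothetical and not part of any library.

```python
def classify_decision(h0_is_true: bool, reject_h0: bool) -> str:
    """Map one test outcome onto the four boxes of the decision table."""
    if h0_is_true and reject_h0:
        return "Type I error (false positive)"
    if not h0_is_true and not reject_h0:
        return "Type II error (false negative)"
    # The remaining two boxes: keep a true H0, or reject a false H0
    return "Correct decision"

# Enumerate all four combinations of reality vs. decision
for h0_is_true in (True, False):
    for reject_h0 in (False, True):
        outcome = classify_decision(h0_is_true, reject_h0)
        print(f"H0 true: {h0_is_true}, reject H0: {reject_h0} -> {outcome}")
```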


Understanding Type I error

A Type I error, also known as a false positive or alpha (α) error, occurs when we reject the null hypothesis even though it is true; its probability is denoted α. It is like convicting someone who is actually innocent. In other words, the tester concludes that there is a statistically significant difference in the data when in fact there is none.

The probability of a Type I error is α, the significance level, which an organization sets according to how much risk it is willing to take. A significance level of x% means it is acceptable to have an x% probability of incorrectly rejecting a true null hypothesis. The corresponding confidence level is 1 − α, so when a decision is made at the 95% confidence level (α = 0.05), we accept a 5% chance of a Type I error.
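To see that α really is the long-run rate of false positives, here is a minimal Python simulation sketch. It assumes a two-sided one-sample z-test with a known standard deviation, applied to normally distributed data generated so that the null hypothesis is exactly true. The function names and the settings (n = 30, 20,000 trials, seed 1) are hypothetical choices for illustration.

```python
import random
from statistics import NormalDist, fmean

def z_test_rejects(sample, mu0, sigma, alpha):
    """Two-sided one-sample z-test with known sigma: True if H0 (mean == mu0) is rejected."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return abs(z) > z_crit

def type1_error_rate(alpha=0.05, n=30, trials=20_000, seed=1):
    """Estimate P(reject H0 | H0 true) from data simulated so that H0 holds exactly."""
    rng = random.Random(seed)
    mu0, sigma = 0.0, 1.0
    false_positives = 0
    for _ in range(trials):
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]  # true mean equals mu0
        if z_test_rejects(sample, mu0, sigma, alpha):
            false_positives += 1  # rejected a true H0: Type I error
    return false_positives / trials

print(f"Estimated Type I error rate: {type1_error_rate():.3f} (alpha = 0.05)")
```

Calling `type1_error_rate(alpha=0.01)` should bring the estimate down to roughly 1%, matching the chosen significance level.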

Understanding Type II error

A Type II error, also known as a false negative or beta (β) error, occurs when we fail to reject the null hypothesis even though it is false. This is like acquitting someone who is in fact guilty. In other words, we conclude that there is no statistically significant difference in the data when in fact there is one.

The probability of a Type II error is denoted β. It is tied to the power of the test, which is the probability of not committing a Type II error, that is, of correctly rejecting a false null hypothesis: power = 1 − β.
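β and power can be estimated the same way, by simulating data for which the null hypothesis is false and counting how often the test misses. This minimal Python sketch assumes a right-tailed one-sample z-test of H0: μ = 0 against H1: μ > 0 with known standard deviation; the function name `simulate_beta` and the scenario (true mean 0.5, n = 30, σ = 1) are hypothetical.

```python
import random
from statistics import NormalDist, fmean

def simulate_beta(mu1, alpha=0.05, n=30, sigma=1.0, trials=20_000, seed=2):
    """Estimate beta = P(fail to reject H0 | H1 true) for a right-tailed
    one-sample z-test of H0: mu = 0 against H1: mu > 0, with known sigma."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)  # about 1.645 for alpha = 0.05
    misses = 0
    for _ in range(trials):
        sample = [rng.gauss(mu1, sigma) for _ in range(n)]  # true mean is mu1, so H0 is false
        z = fmean(sample) / (sigma / n ** 0.5)              # test statistic with mu0 = 0
        if z <= z_crit:
            misses += 1  # H0 not rejected although it is false: Type II error
    beta = misses / trials
    return beta, 1 - beta  # (beta, power)

beta, power = simulate_beta(mu1=0.5)
print(f"beta = {beta:.3f}, power = 1 - beta = {power:.3f}")
```

Re-running with a true mean farther from 0 (for example `mu1=1.0`) should give a smaller β and therefore a higher power.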

Three factors that affect the power of a test are:

- Our sample size (n)

- The significance level of our test (α)

- The true value of the tested parameter (how far it lies from the value stated in the null hypothesis)
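For one concrete case, the effect of these factors can be read off a standard formula. Assuming, for illustration, a right-tailed one-sample z-test of H0: μ = μ0 against H1: μ > μ0 with known standard deviation σ, the power when the true mean is μ1 is:

$$
\text{Power} = 1 - \beta = 1 - \Phi\!\left(z_{1-\alpha} - \frac{(\mu_1 - \mu_0)\sqrt{n}}{\sigma}\right)
$$

Here Φ is the standard normal CDF and z_{1−α} is its (1 − α) quantile. A larger n, or a true mean μ1 farther from μ0, increases the term subtracted inside Φ, while a larger α lowers z_{1−α}; each of these raises the power.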

Both errors lead to false conclusions and poor decisions, which can result in lost sales, lower profit, or reduced customer satisfaction. Moreover, for a given sample size the two types of error trade off against each other: an effort to reduce one generally increases the other.
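The trade-off can be made concrete with a small calculation. This sketch assumes a right-tailed one-sample z-test with known standard deviation, for which β = Φ(z_{1−α} − (μ1 − μ0)√n / σ), and a hypothetical scenario (n = 30, true mean 0.5 against a null mean of 0, σ = 1). `beta_one_sided_z` is an illustrative helper, not a library function. The sample size is held fixed while α is lowered.

```python
from statistics import NormalDist

def beta_one_sided_z(alpha, n, mu0, mu1, sigma):
    """Type II error probability of a right-tailed one-sample z-test
    (known sigma) when the true mean is mu1 > mu0."""
    z_crit = NormalDist().inv_cdf(1 - alpha)   # rejection threshold for the z statistic
    shift = (mu1 - mu0) * n ** 0.5 / sigma     # mean of the z statistic under H1
    return NormalDist().cdf(z_crit - shift)    # P(z <= z_crit) under H1

# Fixed sample size: making alpha stricter raises beta
for alpha in (0.10, 0.05, 0.01):
    beta = beta_one_sided_z(alpha, n=30, mu0=0.0, mu1=0.5, sigma=1.0)
    print(f"alpha = {alpha:.2f} -> beta = {beta:.3f}")
```

With n fixed, each smaller α yields a larger β; lowering both at once generally requires changing something else, such as increasing the sample size.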

That was all about the difference between Type I and Type II errors. We will meet again with a new topic.
